If you work in a regulated environment, you are probably well aware of terms such as validation, calibration, verification, process validation, prospective validation and concurrent validation. In this article, I will explain these terms and more, providing a clear outline of the differences between them.

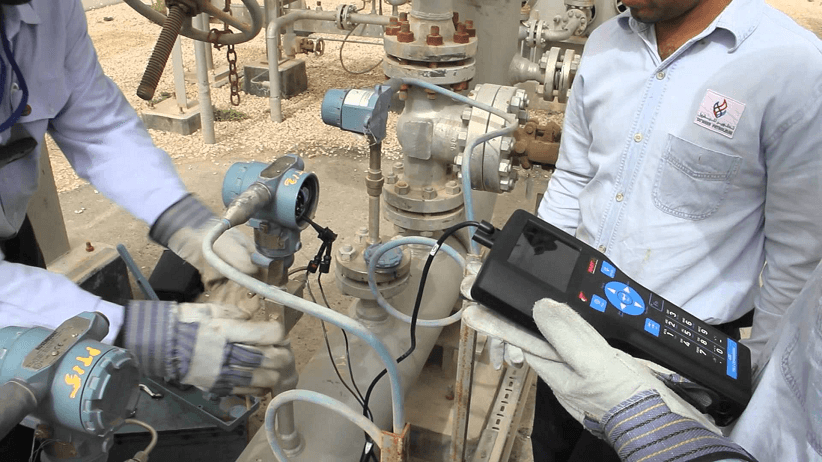

Calibration

Instrument calibration is a process that provides the corrections needed to make a logger accurate. The instrument is compared against a reference or standard to establish the corrections and/or uncertainties characteristic of the instrument being calibrated. The results of the calibration may then be used to adjust the instrument.
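To make the idea of "corrections from comparison with a reference" concrete, here is a minimal Python sketch. The calibration points, the function names and the choice of linear interpolation between points are all assumptions made for illustration, not a prescribed calibration procedure.

```python
from bisect import bisect_left

# Hypothetical calibration points: (logger reading, reference value), in °C.
CAL_POINTS = [(0.4, 0.0), (25.3, 25.0), (50.1, 50.0)]


def corrections(points):
    """Correction at each calibration point = reference value - logger reading."""
    return [(reading, ref - reading) for reading, ref in points]


def apply_correction(reading, points):
    """Correct a reading by linearly interpolating between calibration points.

    Outside the calibrated range, the correction of the nearest point is used
    (an assumption of this sketch).
    """
    table = corrections(points)
    xs = [x for x, _ in table]
    if reading <= xs[0]:
        return reading + table[0][1]
    if reading >= xs[-1]:
        return reading + table[-1][1]
    i = bisect_left(xs, reading)
    (x0, c0), (x1, c1) = table[i - 1], table[i]
    frac = (reading - x0) / (x1 - x0)
    return reading + c0 + frac * (c1 - c0)


print(apply_correction(37.0, CAL_POINTS))
```

In practice the correction table would come from the calibration certificate, together with the stated uncertainties.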

Validation

Validation provides information about the accuracy or inaccuracy of the logger. It is a check to confirm that the instrument meets the specifications required for a particular use; in other words, it is what a laboratory may do as part of its own procedure to determine whether a specific instrument can be used for a specific purpose.

Verification

Verification is the process of checking against a standard or reference to confirm that an instrument meets the general and specific specifications provided by its manufacturer.

Process of Equipment Validation

Process validation is part of the requirements for Good Manufacturing Practices (GMPs) for medical devices and pharmaceuticals as established by the FDA, and involves a range of activities which occur throughout the lifecycle of a product and its process. It involves three main stages: process design, process qualification and continued process verification.

The process of validation is intended to give users of a piece of equipment or a process assurance that it will consistently produce the expected product according to preset quality attributes, acceptance criteria and specifications. There are many ways to carry out validation, but the most common forms of equipment validation are described below.

Prospective Validation

Prospective validation establishes documentary evidence before a process is implemented. The documentation confirms that the system performs as proposed and according to the pre-written protocols. This approach is taken, for instance, to validate processes for new formulations or in new facilities, and it must be completed before routine production begins.

Retrospective Validation

It is applied to process controls, processes and/or facilities which have yet to undergo formal, documented validation. The validation uses historical data as evidence that a process is doing what it was intended to do. It can only be used for well-established processes, and is not suitable where there have been recent changes in processes, equipment and/or products in a production line.

Today, retrospective validation is used only for audits of validated processes, since it is unlikely that any product is still made by a process that has not undergone prospective validation.

Concurrent Validation

It is used to provide documentary evidence that facilities and/or processes actually work the way they are claimed to work, based on data generated in the course of actual process implementation. Critical processing steps are monitored and end products from the current production line are tested to show that the manufacturing process is running in a controlled state.

Revalidation

As the prefix suggests, revalidation means repeating the original validation efforts, including an investigative appraisal of existing performance data. It is important for maintaining the validated status of facilities, processes, systems and equipment.

Analytical Method Validation Parameters

Analytical method validation is the process through which the performance characteristics of a method are shown, by means of specific laboratory studies, to fulfill the specifications for its intended analytical purpose.

Validation parameter descriptions are outlined in the “ICH Guidelines for Industry (Text on Analytical Procedures)” and in Chapter 1225 of the “USP General”. These sources describe each parameter, how it is determined and how it may be used in the validation process according to the intended use of the method.

Accuracy

The accuracy of an analytical method is the degree to which the test results it generates agree with the true value; in other words, the closeness between the true value and the value obtained. There are different ways to establish the true value. A common way to assess accuracy is to analyze samples of known concentration and compare the measured results with the known values.
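Accuracy assessed against samples of known concentration is often reported as percent recovery. The sketch below is a minimal Python illustration; the function names and the sample values are hypothetical, and no acceptance criterion is implied.

```python
def percent_recovery(measured, true_value):
    """Recovery (%) = measured value / true value * 100."""
    return 100.0 * measured / true_value


def mean_recovery(measurements, true_value):
    """Average percent recovery over replicate measurements of one known sample."""
    recoveries = [percent_recovery(m, true_value) for m in measurements]
    return sum(recoveries) / len(recoveries)


# Hypothetical replicate results for a sample known to contain 10.0 mg/L.
replicates = [9.8, 10.1, 10.0]
print(f"mean recovery: {mean_recovery(replicates, 10.0):.1f} %")
```

A mean recovery close to 100 % indicates good agreement with the true value; the acceptable range depends on the method and its intended use.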

Linearity

This refers to the ability of an analytical method to produce test results that are proportional to the concentration of analyte in the sample over a given range, either directly or through a well-defined mathematical transformation. For quality assurance, linearity may be measured directly on the test substance by dilution, or by separate weighings of synthetic mixtures using the proposed procedure.
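Linearity is commonly evaluated by fitting a straight line to the responses measured at several concentrations and inspecting the slope, intercept and coefficient of determination. The following Python sketch uses ordinary least squares; the function name and the data are assumptions made for illustration.

```python
def fit_line(x, y):
    """Ordinary least-squares fit of y = slope * x + intercept.

    Returns (slope, intercept, r_squared).
    """
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    syy = sum((yi - mean_y) ** 2 for yi in y)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    r_squared = sxy ** 2 / (sxx * syy)
    return slope, intercept, r_squared


# Hypothetical dilution series: concentration (mg/L) vs. detector response.
conc = [1.0, 2.0, 4.0, 8.0, 16.0]
resp = [10.2, 19.9, 40.5, 79.8, 160.1]
slope, intercept, r2 = fit_line(conc, resp)
print(slope, intercept, r2)
```

An r² value close to 1 suggests a linear response over the tested range, though a residual plot is usually a better check than r² alone.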

Limit of Detection

This is the point at which a method’s measured value becomes larger than the uncertainty associated with it, i.e. the lowest concentration of analyte in a sample that can be detected (though not necessarily quantified). It is frequently confused with the sensitivity of a method, which is the ability to distinguish very small differences in the mass or concentration of the test analyte.

Limit of Quantitation

This is the minimum amount of test analyte in a sample which can produce quantitative measurements within a target matrix with an acceptable level of precision.
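One widely used way to estimate both the limit of detection and the limit of quantitation, described in the ICH validation guideline, is based on the standard deviation of the response (σ) and the slope of the calibration curve (S): LOD = 3.3σ/S and LOQ = 10σ/S. The Python sketch below applies these formulas; the function names and input values are hypothetical, and other approaches (for example signal-to-noise ratio) are equally valid.

```python
def limit_of_detection(sigma, slope):
    """LOD = 3.3 * sigma / slope (standard deviation of response / calibration slope)."""
    return 3.3 * sigma / slope


def limit_of_quantitation(sigma, slope):
    """LOQ = 10 * sigma / slope."""
    return 10.0 * sigma / slope


# Hypothetical values: sigma from blank responses, slope from the calibration curve.
sigma = 0.5    # response units
slope = 10.0   # response units per mg/L
print(limit_of_detection(sigma, slope), limit_of_quantitation(sigma, slope))
```

Because both limits use the same σ and S, the LOQ obtained this way is always about three times the LOD.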

Ruggedness

The term ruggedness has been replaced by reproducibility, which is defined in the USP General as the degree of reproducibility of results obtained under varying conditions, e.g. different laboratories, analysts, instruments, materials and environmental conditions. It measures how reproducible test results are under expected, normal operating conditions from laboratory to laboratory and from analyst to analyst.

Robustness

This is a measure of the effect of operational parameters on a method’s analysis results. To assess it, selected method parameters are varied within a representative range, and the quantitative influence of each variation is determined. If a parameter’s influence remains within a previously specified tolerance range, that parameter is described as being within the robustness range of the method.
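The tolerance check described above can be sketched in a few lines of Python. The function names, the example results and the 1 % tolerance are all hypothetical; a real study would define the varied parameters and the tolerance in its protocol.

```python
def percent_deviation(result, nominal_result):
    """Absolute deviation of a result from the nominal result, in percent."""
    return abs(result - nominal_result) / abs(nominal_result) * 100.0


def within_robustness_range(nominal_result, varied_results, tolerance_pct):
    """True if every result obtained under varied conditions stays within tolerance."""
    return all(
        percent_deviation(r, nominal_result) <= tolerance_pct
        for r in varied_results
    )


# Hypothetical assay results (%) at nominal pH and at pH -0.2 / +0.2.
nominal = 100.0
varied = [99.4, 100.7]
print(within_robustness_range(nominal, varied, tolerance_pct=1.0))
```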

Precision

Precision is the level of variation in results, expressed using the standard deviation and/or the coefficient of variation. It describes the degree of scatter, or closeness of agreement, within a series of measurements obtained from repeated sampling of a homogeneous sample under similar conditions.
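The two statistics mentioned above can be computed with Python's standard `statistics` module. This is a minimal sketch; the function name and the replicate values are hypothetical, and the sample (n - 1) standard deviation is assumed.

```python
import statistics


def precision_stats(values):
    """Return (mean, sample standard deviation, coefficient of variation in %)."""
    mean = statistics.mean(values)
    sd = statistics.stdev(values)   # sample standard deviation (n - 1)
    cv_percent = 100.0 * sd / mean  # also called relative standard deviation
    return mean, sd, cv_percent


# Hypothetical replicate measurements of one homogeneous sample.
replicates = [10.0, 10.2, 9.8]
mean, sd, cv = precision_stats(replicates)
print(f"mean={mean:.2f}  sd={sd:.2f}  cv={cv:.1f} %")
```

A lower coefficient of variation means less scatter between replicate measurements.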

The Takeaway

Calibration and validation services are important because they help maintain the quality, safety and standards of your equipment, which lowers operating costs and increases efficiency. Calibration, verification and validation checks are critical to ensuring that your processes and equipment function the way they were intended to.